VR Spellcasting

Project information

Description

I've always wanted to work with both VR and AI, so I decided to combine them. This project is a minigame that teaches you how to cast spells in a VR environment. The goal was not spell casting itself, but using a gesture recognition machine learning model to drive all kinds of mechanics inside games. By tweaking parameters and adding your own scripts, it can be adapted to work with any game. It comes with a way of creating your own dataset of drawings, plus a side program that trains a model and then converts it to a format that works with the Unity ML API.

I'd also like to mention that I used the Unity XR Toolkit for this project. It is not the best API to work with, but it is Unity's attempt at a universal VR API, and in that it does succeed.

- Time: 10 weeks

- Team structure: 1 programmer

- Platform: Unity Engine, PyCharm

- Language: C#, Python

- Roles: AI programmer, VR Programmer

Details

Casting System Tool

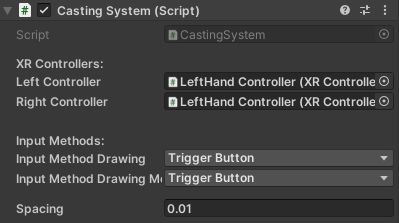

The casting system is the first thing I worked on. It lets you draw shapes in the air. I made it fully modular, so you can configure single-stroke or multi-stroke drawing, the smoothness of the lines, which controller to use, and which button to use.
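The options above can be illustrated with a small Python sketch. This is not the project's C# implementation: `StrokeRecorder`, `min_point_distance` and `multi_stroke` are hypothetical names, and treating "smoothness" as a minimum spacing between recorded points is an assumption made for the example.

```python
import math
from dataclasses import dataclass, field

Point = tuple[float, float, float]


@dataclass
class StrokeRecorder:
    """Collects controller positions into strokes while a button is held."""

    min_point_distance: float = 0.01  # assumed "smoothness" setting (scene units)
    multi_stroke: bool = True         # False: each new stroke replaces the old one
    strokes: list[list[Point]] = field(default_factory=list)
    _drawing: bool = False

    def update(self, button_pressed: bool, controller_pos: Point) -> None:
        """Call once per frame with the chosen button state and controller position."""
        if button_pressed and not self._drawing:
            # Button was just pressed: begin a new stroke.
            if not self.multi_stroke:
                self.strokes.clear()
            self.strokes.append([controller_pos])
            self._drawing = True
        elif button_pressed:
            # Button is held: only keep points that moved far enough.
            last = self.strokes[-1][-1]
            if math.dist(last, controller_pos) >= self.min_point_distance:
                self.strokes[-1].append(controller_pos)
        else:
            # Button released: the current stroke is finished.
            self._drawing = False
```

Which controller and which button feed `update` is left to the caller, which is one way to keep those two choices configurable.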

Symbol Rasterizing

To feed the AI a symbol, I needed a way to capture the symbol on a 2D plane. I added a second camera slightly behind the player that renders only the layer the symbol is drawn on. Its output goes to a render target, which is then converted to a Texture2D. One problem was that I wanted this camera to use orthographic projection. Unfortunately that was not possible, because the XR Toolkit package includes a script that converts all cameras in the scene to perspective projection and forces them to a fixed FOV at all times. As a result, the camera records the drawing slightly less accurately. (This will be fixed in future versions.)
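To show what an orthographic capture amounts to, here is a minimal software sketch in Python. It is not how the project captures the symbol (that uses a Unity camera and a render target). The function name `rasterize`, the default grid size of 28, and the assumption that the symbol faces the XY plane are all hypothetical choices for illustration.

```python
def rasterize(points, size=28):
    """Orthographically project 3D points onto the XY plane and mark them
    in a size x size grid (row 0 is the top of the image)."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    min_x, min_y = min(xs), min(ys)
    # One scale for both axes, so the symbol keeps its aspect ratio.
    extent = max(max(xs) - min_x, max(ys) - min_y) or 1.0
    grid = [[0.0] * size for _ in range(size)]
    for x, y, _z in points:  # depth is dropped: that is the orthographic part
        col = min(size - 1, int((x - min_x) / extent * (size - 1) + 0.5))
        row = min(size - 1, int((y - min_y) / extent * (size - 1) + 0.5))
        grid[size - 1 - row][col] = 1.0  # flip rows so +Y points up
    return grid
```

Because depth is ignored, a stroke drawn closer to or farther from the player produces the same image, which a perspective camera does not guarantee. The sketch only marks the sampled points; a fuller version would also draw the line segments between them.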

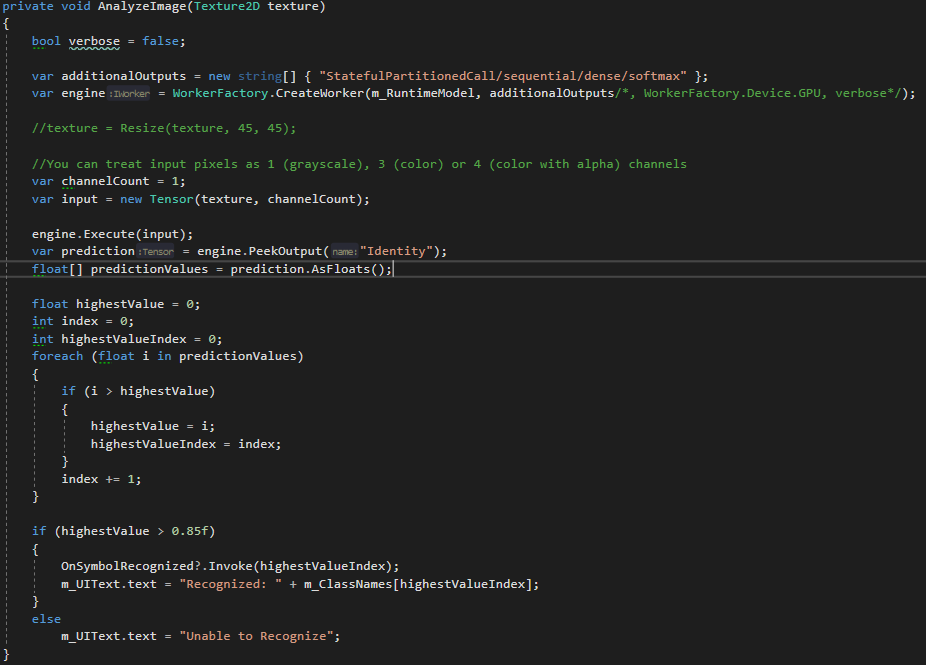

Barracuda AI model loader

To load the AI model I use the Barracuda package that is included with the ML-Agents API. It takes a Texture2D and converts it to a tensor, which is what the model takes as input. The model then returns an array of predictions, each a number from 0 to 1. The loader finds the highest prediction and checks whether it is greater than 0.85. This check makes sure the best match is confident enough. If it is, the loader invokes a callback function, which lets anyone hook their own scripts into this one.
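The selection step described above can be sketched in Python as follows. This is an illustration of the logic only, not the Barracuda code: the function name `classify`, the label names, and the callback signature are hypothetical, while the 0.85 threshold comes from the text.

```python
from typing import Callable, Optional, Sequence

CONFIDENCE_THRESHOLD = 0.85  # value taken from the description above


def classify(
    predictions: Sequence[float],
    labels: Sequence[str],
    on_recognized: Callable[[str, float], None],
    threshold: float = CONFIDENCE_THRESHOLD,
) -> Optional[str]:
    """Pick the highest prediction and fire the callback if it beats the threshold."""
    best_index = max(range(len(predictions)), key=lambda i: predictions[i])
    best_score = predictions[best_index]
    if best_score > threshold:
        # Confident enough: hand the result to whatever the game hooked in.
        on_recognized(labels[best_index], best_score)
        return labels[best_index]
    return None  # no symbol was recognized with enough confidence
```

A game would pass its own function as `on_recognized`, for example one that spawns the spell matching the recognized label.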